Regression analysis is a widely used family of statistical methods for estimating how various factors affect specific facets of a business. These methods help data analysts understand relationships between variables, make predictions, and uncover patterns within data. By drawing on historical data to forecast future outcomes, regression analysis supports better predictions and more informed decision-making, and enterprises across many domains and industries use it to guide strategic decisions. In this article, we explore how regression analysis works, its applications and main types, and the benefits it offers enterprises that invest in it.

What is Regression Analysis?

Enterprises have long sought the proverbial “secret sauce” to increasing revenue. While a definitive formula for boosting sales has yet to be discovered, powerful advances in statistics and data science have made it easier to grasp relationships between potentially influential factors and reported sales results and earnings.

In the world of data analytics and statistical modeling, regression analysis stands out for its versatility and predictive power. At its core, it models the relationship between one or more independent variables and a dependent variable, asking how changes in the independent variables correspond to changes in the dependent variable.

How Does Regression Analysis Work?

Regression analysis works by constructing a mathematical model that represents the relationships among the variables in question. This model is expressed as an equation that captures the expected influence of each independent variable on the dependent variable.
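As an illustration, a linear model with k independent variables is commonly written as follows. The symbols are introduced here for illustration: y is the dependent variable, x_1 through x_k are the independent variables, β_0 is the intercept, β_1 through β_k are the coefficients to be estimated, and ε is an error term for variation the model does not explain.

```latex
y = \beta_0 + \beta_1 x_1 + \beta_2 x_2 + \cdots + \beta_k x_k + \varepsilon
```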

End-to-end, the regression analysis process consists of data collection and preparation, model selection, parameter estimation, and model evaluation.

Step 1: Data Collection and Preparation

The first step in regression analysis involves gathering and preparing the data. As with any data analytics, data quality is imperative. In this context, preparation includes identifying the dependent variable and the candidate independent variables, cleaning the data, handling missing values, and transforming variables as needed.
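Two common ways to handle missing values are dropping incomplete records and filling gaps with a column mean. The following is a minimal sketch of both, assuming each record is a dict of numeric fields and that a missing value is encoded as `None` or `float("nan")`; the field names and values are hypothetical.

```python
import math


def is_missing(value):
    """Treat None and NaN as missing (an assumption about how gaps are encoded)."""
    return value is None or (isinstance(value, float) and math.isnan(value))


def drop_incomplete_rows(rows):
    """Listwise deletion: keep only records in which no field is missing."""
    return [row for row in rows if not any(is_missing(v) for v in row.values())]


def mean_impute(rows, field):
    """Return copies of the records with missing values in `field` replaced by its mean."""
    observed = [row[field] for row in rows if not is_missing(row[field])]
    fill = sum(observed) / len(observed)
    return [{**row, field: fill if is_missing(row[field]) else row[field]} for row in rows]


# Hypothetical records: ad spend (in $k) and weekly sales (in units).
records = [
    {"ad_spend": 10.0, "sales": 120.0},
    {"ad_spend": None, "sales": 135.0},
    {"ad_spend": 14.0, "sales": float("nan")},
    {"ad_spend": 18.0, "sales": 160.0},
]

clean = drop_incomplete_rows(records)        # keeps the first and last records
imputed = mean_impute(records, "ad_spend")   # fills the None with the mean, 14.0
```

Dropping rows is simplest but discards information; imputation keeps every record but can understate variability, so the choice depends on how much data is missing and why.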

Step 2: Model Selection

In this step, the appropriate regression model is selected based on the nature of the data and the research question. For example, a simple linear regression is suitable when exploring a single predictor, while multiple linear regression is better for use cases with multiple predictors. Polynomial regression, logistic regression, and other specialized forms can be employed for various other use cases.

Step 3: Parameter Estimation

The next step is to estimate the model parameters. For linear regression, this involves finding the coefficients (the intercept and the slopes) that best fit the data. This is most often done with the least squares method, which minimizes the sum of squared differences between observed and predicted values.
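For a single predictor, least squares has a closed-form solution: the slope is the covariance of x and y divided by the variance of x, and the intercept makes the line pass through the point of means. A minimal sketch in plain Python, using hypothetical advertising-spend and sales figures:

```python
def fit_simple_ols(x, y):
    """Return (intercept, slope) minimizing the sum of squared residuals.

    Closed form for one predictor:
        slope = sum((x_i - x_mean) * (y_i - y_mean)) / sum((x_i - x_mean) ** 2)
        intercept = y_mean - slope * x_mean
    """
    n = len(x)
    x_mean = sum(x) / n
    y_mean = sum(y) / n
    sxy = sum((xi - x_mean) * (yi - y_mean) for xi, yi in zip(x, y))
    sxx = sum((xi - x_mean) ** 2 for xi in x)
    slope = sxy / sxx
    intercept = y_mean - slope * x_mean
    return intercept, slope


# Hypothetical data: advertising spend (x) vs. sales (y).
x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [3.1, 4.9, 7.2, 8.8, 11.0]
intercept, slope = fit_simple_ols(x, y)
```

For these numbers the fitted line is approximately y = 1.09 + 1.97x. Libraries such as statsmodels or scikit-learn perform the same estimation for many predictors at once.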

Step 4: Model Evaluation

Model evaluation is critical for determining the model’s goodness of fit and predictive accuracy. This process involves assessing such metrics as the coefficient of determination (R-squared), mean squared error (MSE), and others. Visualization tools—scatter plots and residual plots, for example—can aid in understanding how well the model captures the data’s patterns.
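Both metrics can be computed directly from observed values and a model's predictions. The sketch below uses hypothetical observations and predictions. R-squared is computed as 1 minus the ratio of the residual sum of squares to the total sum of squares, which for a least-squares fit with an intercept equals the share of variance the model explains.

```python
def mse(y_true, y_pred):
    """Mean squared error: the average of the squared residuals."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def r_squared(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    y_mean = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))  # unexplained variation
    ss_tot = sum((t - y_mean) ** 2 for t in y_true)             # total variation
    return 1 - ss_res / ss_tot


# Hypothetical observed values and model predictions.
observed = [3.0, 5.0, 7.0, 9.0]
predicted = [2.5, 5.5, 7.0, 9.0]
error = mse(observed, predicted)       # 0.125
fit = r_squared(observed, predicted)   # 0.975
```

Lower MSE and higher R-squared indicate a closer fit, but both should ideally be checked on data the model was not trained on.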

Interpreting the Results of Regression Analysis

In order to be actionable, data must be transformed into information. In a similar sense, once the regression analysis has yielded results, they must be interpreted. This includes interpreting coefficients and significance, determining goodness of fit, and performing residual analysis.

Interpreting Coefficients and Significance

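Interpreting regression coefficients is crucial for understanding the relationships between variables. A positive coefficient suggests a positive relationship; a negative coefficient suggests a negative relationship. In linear regression, a coefficient’s magnitude is the expected change in the dependent variable for a one-unit increase in that independent variable, holding the other independent variables constant.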

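The significance of coefficients is determined through hypothesis testing, a common statistical method for deciding whether sample data contain sufficient evidence to draw a conclusion, and is summarized by the p-value. For each coefficient, the test typically asks whether its true value could plausibly be zero; the smaller the p-value, the stronger the evidence that the variable has a real, nonzero relationship with the outcome.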
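For a simple linear regression, the test statistic for the slope is the estimated slope divided by its standard error. The sketch below computes that t-statistic in plain Python on hypothetical data. Converting it to a p-value requires the t distribution with n - 2 degrees of freedom, which the standard library does not provide; a statistics library (for example, SciPy's `scipy.stats.t.sf`) would normally handle that step.

```python
import math


def slope_t_statistic(x, y):
    """Return (slope, standard_error, t_stat) for a simple linear regression.

    t_stat tests the null hypothesis that the true slope is zero; it is compared
    against a t distribution with n - 2 degrees of freedom to obtain a p-value.
    """
    n = len(x)
    x_mean = sum(x) / n
    y_mean = sum(y) / n
    sxx = sum((xi - x_mean) ** 2 for xi in x)
    slope = sum((xi - x_mean) * (yi - y_mean) for xi, yi in zip(x, y)) / sxx
    intercept = y_mean - slope * x_mean
    # Residual variance uses n - 2 because two parameters were estimated.
    ss_res = sum((yi - (intercept + slope * xi)) ** 2 for xi, yi in zip(x, y))
    standard_error = math.sqrt(ss_res / (n - 2) / sxx)
    return slope, standard_error, slope / standard_error


# Hypothetical data: advertising spend (x) vs. sales (y).
x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [3.1, 4.9, 7.2, 8.8, 11.0]
slope, se, t_stat = slope_t_statistic(x, y)
```

Here the t-statistic is roughly 36, far from zero, so the corresponding p-value would be very small.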

Determining Goodness of Fit

The coefficient of determination, denoted R-squared, indicates the proportion of the variance in the dependent variable that is explained by the independent variables. A higher R-squared value suggests a better fit, but it does not imply causation: correlation doesn’t necessarily equal causation.

Performing Residual Analysis

Analyzing residuals helps validate the assumptions of regression analysis. In a well-fitting model, residuals are randomly scattered around zero. Patterns in residuals could indicate violations of assumptions or omitted variables that should be included in the model.
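To see what a residual pattern looks like, the following sketch fits a straight line to hypothetical data that actually follow a U-shaped curve. The residuals still sum to zero, but their signs run positive, negative, negative, negative, positive: a systematic pattern rather than random scatter, which signals that the linear model is missing the curvature.

```python
def fit_line(x, y):
    """Least-squares (intercept, slope) for a single predictor."""
    n = len(x)
    x_mean, y_mean = sum(x) / n, sum(y) / n
    slope = (sum((a - x_mean) * (b - y_mean) for a, b in zip(x, y))
             / sum((a - x_mean) ** 2 for a in x))
    return y_mean - slope * x_mean, slope


def residuals(x, y):
    """Observed minus predicted values from a straight-line fit."""
    intercept, slope = fit_line(x, y)
    return [yi - (intercept + slope * xi) for xi, yi in zip(x, y)]


# Hypothetical data with a curved (quadratic) relationship.
x = [-2.0, -1.0, 0.0, 1.0, 2.0]
y = [4.0, 1.0, 0.0, 1.0, 4.0]
res = residuals(x, y)
signs = "".join("+" if r > 0 else "-" if r < 0 else "0" for r in res)
print(signs)  # +---+
```

In practice the same check is usually done visually with a residual plot against the predicted values or each predictor.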

Key Assumptions of Regression Analysis

For regression analysis to yield reliable and meaningful results, the model must satisfy several assumptions. Linear regression in particular relies on linearity, independence, homoscedasticity, normality, and no multicollinearity when models are interpreted and validated.

- Linearity. The relationship between independent and dependent variables is assumed to be linear. This means that a one-unit change in an independent variable is associated with the same change in the dependent variable, regardless of that variable’s starting level.

- Independence. The residuals—differences between observed and predicted values—should be independent of each other. In other words, the value of the residual for one data point should not provide information about the residual for another data point.

- Homoscedasticity. The variance of residuals should remain consistent across all levels of the independent variables. If the variance of residuals changes systematically, the data show heteroscedasticity, which makes the model’s standard errors, and therefore its hypothesis tests, unreliable.

- Normality. Residuals should follow a normal distribution. While this assumption is more crucial for smaller sample sizes, violations can impact the reliability of statistical inference and hypothesis testing in many scenarios.

- No multicollinearity. Multicollinearity—a statistical phenomenon where several independent variables in a model are correlated—makes interpreting individual variable contributions difficult and may result in unreliable coefficient estimates. In multiple linear regression, independent variables should not be highly correlated.
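One widely used check for the independence assumption when observations are ordered in time is the Durbin-Watson statistic; it is not named in the list above and is offered here only as an illustration. It compares successive residuals: values near 2 suggest no first-order autocorrelation, values toward 0 suggest positive autocorrelation, and values toward 4 suggest negative autocorrelation. The residual sequences below are hypothetical.

```python
def durbin_watson(residuals):
    """Durbin-Watson statistic for residuals listed in time (or sequence) order."""
    num = sum((residuals[t] - residuals[t - 1]) ** 2 for t in range(1, len(residuals)))
    den = sum(r ** 2 for r in residuals)
    return num / den


# Hypothetical residual sequences.
alternating = [1.0, -1.0, 1.0, -1.0]         # flips sign every step
drifting = [1.0, 1.0, 1.0, -1.0, -1.0, -1.0]  # long runs of the same sign

dw_alternating = durbin_watson(alternating)  # 3.0, negative autocorrelation
dw_drifting = durbin_watson(drifting)        # about 0.67, positive autocorrelation
```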

Types of Regression Analysis

There are many regression analysis techniques available for different use cases. The following are some of the most commonly used approaches.

| Regression type | Typical use |
|---|---|
| Simple Linear Regression | Studies the relationship between two variables (a predictor and an outcome) |
| Multiple Linear Regression | Captures the combined impact of several predictor variables |
| Polynomial Regression | Represents non-linear relationships and more complex patterns |
| Logistic Regression | Estimates the probability of an outcome based on predictor variables |
| Ridge Regression | Handles highly correlated predictors by shrinking coefficients (regularization), reducing overfitting |
| Lasso Regression | Shrinks coefficients and can set some to exactly zero, reducing overfitting and selecting variables |

Common types of regression analysis.

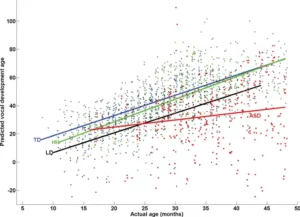

Simple Linear Regression

Simple linear regression is the most basic form of regression analysis. It studies the relationship between two continuous variables: an independent variable (the predictor) and a dependent variable (the outcome).

Source: https://upload.wikimedia.org/wikipedia/commons/b/b0/Linear_least_squares_example2.svg

Multiple Linear Regression (MLR)

MLR extends simple linear regression to several independent variables, capturing the combined impact of multiple factors for a more comprehensive analysis of how they collectively influence the outcome. Each coefficient reflects one factor’s effect while the others are held constant.

Source: https://cdn.corporatefinanceinstitute.com/assets/multiple-linear-regression.png
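Multiple linear regression can be estimated by solving the normal equations (XᵀX)b = Xᵀy, where X holds a column of ones for the intercept plus one column per predictor. The following is a small dependency-free sketch of that approach; the data are hypothetical and generated from y = 1 + 2·x1 + 3·x2 with no noise, so the fit should recover those coefficients. Production libraries typically use more numerically stable decompositions than the normal equations.

```python
def solve_linear_system(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back-substitution
        tail = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - tail) / m[r][r]
    return x


def fit_mlr(rows, y):
    """Return [intercept, b1, ..., bk] by solving (X^T X) b = X^T y."""
    X = [[1.0] + list(r) for r in rows]  # prepend a column of ones for the intercept
    k = len(X[0])
    xtx = [[sum(row[i] * row[j] for row in X) for j in range(k)] for i in range(k)]
    xty = [sum(row[i] * yi for row, yi in zip(X, y)) for i in range(k)]
    return solve_linear_system(xtx, xty)


# Hypothetical observations of two predictors (x1, x2).
rows = [(0, 0), (1, 0), (0, 1), (1, 1), (2, 1), (1, 3)]
y = [1 + 2 * a + 3 * b for a, b in rows]
coefs = fit_mlr(rows, y)  # approximately [1.0, 2.0, 3.0]
```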

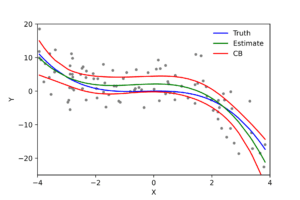

Polynomial Regression

For non-linear relationships, polynomial regression accommodates curves by fitting a polynomial equation to the data, allowing more flexible modeling of complex patterns. For example, a second-order polynomial regression, also known as a quadratic regression, can capture a U-shaped or inverted U-shaped pattern in the data.

Source: https://en.wikipedia.org/wiki/Polynomial_regression#/media/File:Polyreg_scheffe.svg
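Although the fitted curve is non-linear in x, polynomial regression is still linear in its coefficients, so it can be estimated with the same least-squares machinery by treating x, x², and so on as separate predictors. A minimal sketch, using hypothetical noise-free data generated from y = 2 - 3x + 0.5x²:

```python
def solve_linear_system(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        tail = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - tail) / m[r][r]
    return x


def fit_polynomial(x, y, degree):
    """Least-squares polynomial fit; returns [c0, c1, ..., c_degree]."""
    # Each row of the design matrix is [1, x, x^2, ..., x^degree].
    X = [[xi ** p for p in range(degree + 1)] for xi in x]
    k = degree + 1
    xtx = [[sum(row[i] * row[j] for row in X) for j in range(k)] for i in range(k)]
    xty = [sum(row[i] * yi for row, yi in zip(X, y)) for i in range(k)]
    return solve_linear_system(xtx, xty)


x = [-2.0, -1.0, 0.0, 1.0, 2.0, 3.0]
y = [2 - 3 * v + 0.5 * v ** 2 for v in x]
coefs = fit_polynomial(x, y, degree=2)  # approximately [2.0, -3.0, 0.5]
```

High polynomial degrees can overfit and make the normal equations ill-conditioned, so low degrees are the usual starting point.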

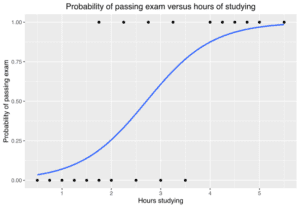

Logistic Regression

Logistic regression estimates the probability of an event occurring based on one or more predictor variables. In contrast to linear regression, logistic regression is designed to predict categorical outcomes, which are typically binary in nature—for example, yes/no or 0/1.

Source: https://en.m.wikipedia.org/wiki/File:Exam_pass_logistic_curve.svg
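Logistic regression models the probability of the positive outcome as the sigmoid of a linear combination of the predictors. Its coefficients have no closed-form solution and are found iteratively; the sketch below uses plain gradient descent on the mean log-loss with one predictor. The hours-studied and pass/fail values are hypothetical, and the learning rate and iteration count are illustrative choices rather than recommended settings.

```python
import math


def sigmoid(z):
    """Map any real number to a probability between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))


def fit_logistic(x, y, learning_rate=0.1, iterations=10_000):
    """Fit P(y = 1 | x) = sigmoid(b0 + b1 * x) by gradient descent on the mean log-loss."""
    b0, b1 = 0.0, 0.0
    n = len(x)
    for _ in range(iterations):
        # Prediction errors give the gradient of the mean log-loss.
        errors = [sigmoid(b0 + b1 * xi) - yi for xi, yi in zip(x, y)]
        b0 -= learning_rate * sum(errors) / n
        b1 -= learning_rate * sum(e * xi for e, xi in zip(errors, x)) / n
    return b0, b1


# Hypothetical data: hours studied vs. whether the exam was passed (1) or not (0).
hours = [0.5, 1.0, 1.5, 2.0, 2.5, 3.0, 3.5, 4.0, 4.5, 5.0]
passed = [0, 0, 0, 1, 0, 1, 0, 1, 1, 1]
b0, b1 = fit_logistic(hours, passed)
p_low = sigmoid(b0 + b1 * 0.5)   # estimated pass probability after 0.5 hours
p_high = sigmoid(b0 + b1 * 5.0)  # estimated pass probability after 5 hours
```

A positive b1 means the estimated probability of passing rises with study time; classifying with a 0.5 threshold turns these probabilities into yes/no predictions.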

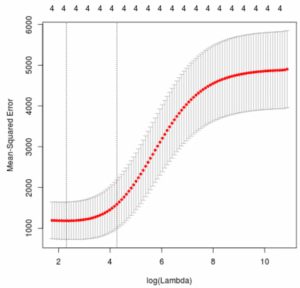

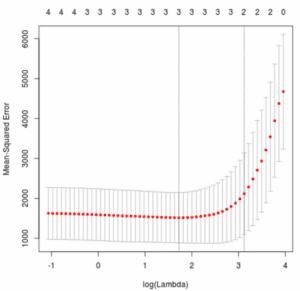

Ridge Regression

Ridge regression is typically employed when the independent variables are highly correlated. It is a regularization method: it adds a penalty on the squared size of the coefficients, which shrinks them toward zero, stabilizes the estimates, and makes the model less susceptible to overfitting.

Source: https://www.statology.org/ridge-regression-in-r/
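With the data centered, the ridge estimate has the closed form (XᵀX + λI)⁻¹Xᵀy, where λ controls the strength of the penalty and λ = 0 reduces to ordinary least squares. The following sketch handles exactly two predictors so the 2×2 inverse can be written out by hand; the nearly identical predictors and the response values are hypothetical.

```python
def fit_ridge_two_predictors(x1, x2, y, lam):
    """Ridge regression with two predictors via (X^T X + lam * I)^-1 X^T y.

    Predictors and response are centered first so the intercept is not penalized.
    Returns (intercept, b1, b2).
    """
    n = len(y)
    m1, m2, my = sum(x1) / n, sum(x2) / n, sum(y) / n
    c1 = [v - m1 for v in x1]
    c2 = [v - m2 for v in x2]
    cy = [v - my for v in y]
    # Entries of X^T X + lam * I and X^T y on the centered data.
    s11 = sum(a * a for a in c1) + lam
    s22 = sum(b * b for b in c2) + lam
    s12 = sum(a * b for a, b in zip(c1, c2))
    t1 = sum(a * v for a, v in zip(c1, cy))
    t2 = sum(b * v for b, v in zip(c2, cy))
    # Invert [[s11, s12], [s12, s22]] explicitly.
    det = s11 * s22 - s12 * s12
    b1 = (s22 * t1 - s12 * t2) / det
    b2 = (s11 * t2 - s12 * t1) / det
    return my - b1 * m1 - b2 * m2, b1, b2


# Hypothetical, highly correlated predictors.
x1 = [1.0, 2.0, 3.0, 4.0, 5.0]
x2 = [1.1, 1.9, 3.2, 3.9, 5.1]
y = [3.1, 4.9, 7.2, 8.8, 11.0]

ols = fit_ridge_two_predictors(x1, x2, y, lam=0.0)    # ordinary least squares
ridge = fit_ridge_two_predictors(x1, x2, y, lam=1.0)  # penalized fit
```

With these numbers, least squares splits the effect unevenly between the two nearly identical predictors (about 0.86 and 1.11), while ridge with λ = 1 gives a smaller, more balanced pair (about 0.93 and 0.94).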

Lasso Regression

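Like ridge regression, lasso regression (short for least absolute shrinkage and selection operator) penalizes large coefficients to reduce overfitting and the instability caused by correlated variables. Unlike ridge, its absolute-value penalty can shrink some coefficients to exactly zero, effectively removing those variables from the model.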

Source: https://www.statology.org/lasso-regression-in-r/
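Because the lasso penalty is not differentiable at zero, it is commonly fitted with coordinate descent, updating one coefficient at a time with a soft-thresholding step. The sketch below minimizes (1/2n)·‖y − Xb‖² + λ·‖b‖₁ on centered data. The data are hypothetical and constructed so that the second predictor has only a small effect; the number of sweeps is an illustrative choice.

```python
def soft_threshold(rho, lam):
    """Shrink rho toward zero by lam, returning exactly 0.0 inside [-lam, lam]."""
    if rho > lam:
        return rho - lam
    if rho < -lam:
        return rho + lam
    return 0.0


def fit_lasso(columns, y, lam, sweeps=100):
    """Lasso via cyclic coordinate descent on centered data (intercept unpenalized).

    `columns` is a list of predictor columns. Returns (intercept, coefficients).
    """
    n = len(y)
    means = [sum(col) / n for col in columns]
    y_mean = sum(y) / n
    xs = [[v - m for v in col] for col, m in zip(columns, means)]
    residual = [v - y_mean for v in y]  # all coefficients start at zero
    coefs = [0.0] * len(xs)
    for _ in range(sweeps):
        for j, xj in enumerate(xs):
            # Add coordinate j's current contribution back into the residual.
            partial = [r + xi * coefs[j] for r, xi in zip(residual, xj)]
            rho = sum(a * b for a, b in zip(xj, partial)) / n
            z = sum(a * a for a in xj) / n
            coefs[j] = soft_threshold(rho, lam) / z
            residual = [p - xi * coefs[j] for p, xi in zip(partial, xj)]
    intercept = y_mean - sum(c * m for c, m in zip(coefs, means))
    return intercept, coefs


x1 = [-2.0, -1.0, 0.0, 1.0, 2.0]
x2 = [1.0, -1.0, 0.0, -1.0, 1.0]
y = [3.0 * a + 0.2 * b for a, b in zip(x1, x2)]

_, coefs = fit_lasso([x1, x2], y, lam=0.5)           # about [2.75, 0.0]
_, coefs_strong = fit_lasso([x1, x2], y, lam=10.0)   # [0.0, 0.0]
```

With λ = 0.5, the weak second predictor is dropped entirely (its coefficient is exactly 0.0) while the first is shrunk from 3 to 2.75; a large enough λ removes every predictor.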

Regression Analysis Benefits and Use Cases

Because it draws on historical data to forecast future outcomes, regression analysis enables better predictions and more informed decision-making, which gives it value for enterprises in fields from finance to marketing.

For example, regression analysis plays a crucial role in optimizing transportation and logistics operations. By predicting demand patterns, it allows enterprises to adjust inventory levels and optimize supply chain management. It can also help optimize routes by identifying factors that influence travel times and delivery delays, leading to more accurate scheduling and resource allocation. In fleet management, it can help predict maintenance needs.

Here are examples of how other industries use regression analysis:

- Economics and finance. Regression models help economists understand the interplay of variables such as interest rates, inflation, and consumer spending, informing monetary policy decisions and economic forecasts.

- Healthcare. Medical researchers use regression analysis to determine how age, lifestyle choices, genetics, and environmental factors contribute to health outcomes, which helps in designing personalized treatment plans and predicting disease risk.

- Marketing and business. Enterprises use regression analysis to understand consumer behavior, optimize pricing strategies, and evaluate marketing campaign effectiveness.

Challenges and Limitations

Despite its power, regression analysis is not without challenges and limitations. For example, overfitting occurs when a model is so complex that it fits the noise in the data rather than the underlying patterns, and multicollinearity can lead to unstable coefficient estimates.

To deal with these issues, correlation analysis and the variance inflation factor (VIF) can help identify redundant, highly correlated variables to remove, and principal component analysis (PCA) can combine correlated variables into a smaller set of uncorrelated components. Regularized regression techniques such as ridge regression, lasso regression, and elastic net regression can reduce overfitting and limit the impact of correlated variables on the model’s accuracy.
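The variance inflation factor for a predictor is 1 / (1 - R²), where R² comes from regressing that predictor on all the other predictors. The sketch below covers only the two-predictor case, in which that R² equals the squared Pearson correlation between the two predictors. The data are hypothetical, and the cutoff of 5 or 10 often used to flag a problem is a commonly cited rule of thumb rather than a fixed standard.

```python
import math


def pearson_r(a, b):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    return cov / math.sqrt(va * vb)


def vif_two_predictors(a, b):
    """VIF = 1 / (1 - R^2); with only two predictors, R^2 is the squared correlation."""
    r = pearson_r(a, b)
    return 1.0 / (1.0 - r * r)


# Hypothetical predictors: x2 nearly duplicates x1, x3 is uncorrelated with x1.
x1 = [1.0, 2.0, 3.0, 4.0, 5.0]
x2 = [1.1, 1.9, 3.2, 3.9, 5.1]
x3 = [1.0, -1.0, 0.0, -1.0, 1.0]

vif_high = vif_two_predictors(x1, x2)  # roughly 140
vif_low = vif_two_predictors(x1, x3)   # 1.0, no inflation
```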

Linear regression methods also assume that the effect of each independent variable is constant across all of its levels, an assumption that might not hold in practice. For example, the relationship between an app’s ease of use and its subscription renewal rate may not be well represented by a linear model, since renewals may rise exponentially or logarithmically with the level of usability.
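When a relationship looks logarithmic, one common approach is to transform the independent variable and fit an ordinary linear regression on the transformed values. The sketch below assumes hypothetical usability scores and renewal rates generated from renewal = 0.4 + 0.2·ln(score) with no noise, purely for illustration, and compares a fit on the raw score with a fit on its logarithm.

```python
import math


def fit_line(x, y):
    """Least-squares (intercept, slope) for a single predictor."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    slope = sum((a - mx) * (b - my) for a, b in zip(x, y)) / sum((a - mx) ** 2 for a in x)
    return my - slope * mx, slope


def r_squared(x, y, intercept, slope):
    """1 - SS_res / SS_tot for a fitted line."""
    my = sum(y) / len(y)
    ss_res = sum((b - (intercept + slope * a)) ** 2 for a, b in zip(x, y))
    ss_tot = sum((b - my) ** 2 for b in y)
    return 1 - ss_res / ss_tot


# Hypothetical data with diminishing returns to usability.
usability = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
renewal = [0.4 + 0.2 * math.log(u) for u in usability]

raw_fit = fit_line(usability, renewal)
log_usability = [math.log(u) for u in usability]
log_fit = fit_line(log_usability, renewal)  # recovers intercept 0.4, slope 0.2

r2_raw = r_squared(usability, renewal, *raw_fit)
r2_log = r_squared(log_usability, renewal, *log_fit)
```

The straight line on the raw score leaves systematic error, while the fit on the log of the score matches the curve; with real data, the better transformation would be chosen by inspecting residuals rather than assumed in advance.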

Bottom Line: Regression Analysis for Enterprise Use

Regression analysis is an indispensable tool in the arsenal of data analysts and researchers. It uncovers hidden relationships, enables more accurate predictions of outcomes, and reveals patterns within complex data that can aid strategic decision-making.

While it has limitations, many of them can be minimized with the use of other analytical methods. With a solid understanding of its mechanisms, types, and applications, enterprises across nearly all domains can harness its potential to extract valuable information.

Doing so requires investment, not just in the right data analytics and visualization tools and expertise, but also in a commitment to collecting and preparing high-quality data and to training staff to incorporate regression results into decision-making processes. Regression analysis should be just one of the arrows in a business’s data analytics and data management quiver.
